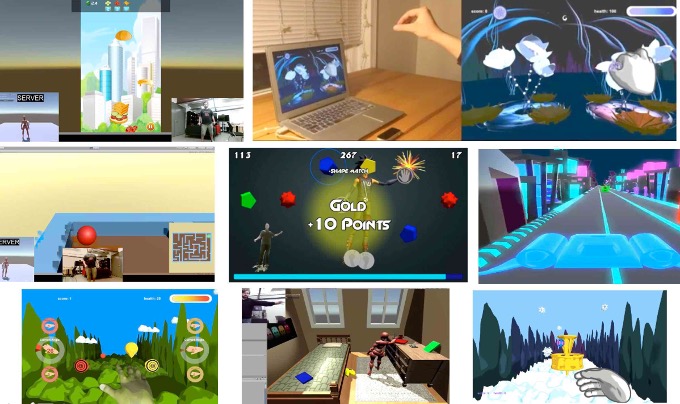

The enAblegames Libraries enable fast, easy, and low-cost creation of fitness and therapy games

enAblegames was designed as a lifelong, evergreen gaming platform for therapy and fitness at home or in the clinic, based on one simple fact: people get bored playing the same games over and over again. To be a viable platform, we had to lower the time and cost of adding new games to our system so that new games can be added in hours or days rather than months or years. The key differentiator between enAbleGames and existing customizable active video games (AVGs) is that the enAbleGames platform is built with a flexible, modular architecture that can be adapted as patients (and technologies) progress. Our platform includes:

1) enAblegames Libraries to create new games, or to adapt and calibrate existing games, as enAbleGames AVGs

2) The patent-pending SUKI body-tracking system, which translates body motions into game input

3) A cloud-based web portal that records player performance and gives clinicians remote access to games

4) The enAbleGames Digital Body Replayer (DBR)

The enAblegames platform components are detailed below:

The enAblegames libraries can be added to an existing game quickly (in a matter of minutes or hours), converting it from its original inputs (keyboard, mouse, controller, or tilt/touch) to body-driven inputs (body tracking or robotic inputs).

Our game libraries are written in C# using the Unity3D engine and access the data stored in our database through the REST API endpoints provided by our backend server app. The libraries use these endpoints to upload data generated during a session, read data such as player parameters, and connect to the platform for real-time features (e.g., remote control).

We have produced only 35+ games to date because we focused on creating this flexible architecture and validating our games through trials. However, our API's design permits us to create new games, repurpose existing games, or provide our libraries to third-party developers, supporting a web portal with eventually hundreds of games and activities for a variety of therapies and conditions. One of our games was converted from a traditional mobile version into an enAbleGames game in only a few days and for only a few hundred dollars, and college students have added new games using the libraries as part of a 3-week class project. This is a strategic advantage over competitors, where each game has a long and costly development cycle yet supports only one therapy. enAbleGames intends to release new games each month.

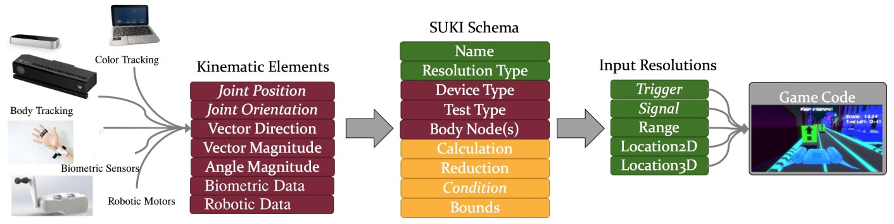

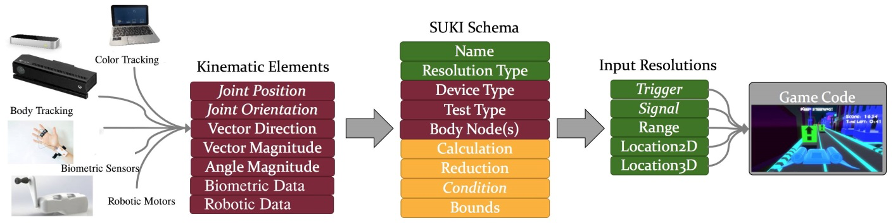

SUKI is a patent-pending framework that provides developers with a library of abstracted inputs that can be programmed into the game and are then attached to concrete inputs at runtime, as specified by an external configuration file. This allows the same game to interpret arm motion, elbow flexion, torso leaning, or any other body motion perceived by the sensor as the target input that controls the game elements or character. When a new patient with a new therapeutic goal uses the game, simply specifying a local configuration file external to the game adapts the same software to their desired motion, without editing or recompiling the code or requiring the expertise of a software programmer. The system can also monitor constraints such as posture or correctness of movement so the game can respond accordingly.

Using SUKI, we have created a system in which each game can be configured at runtime to be controlled by dozens of player movement profiles, such as shoulder or elbow rotation, stepping, head movement, or one of a half-dozen balance profiles currently being tested on adults with Parkinson's. If a new movement profile is desired, such as one using the knee, it can be added quickly (in minutes) from scratch without changing a single line of game code.
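A minimal Python sketch of the runtime binding idea follows; it is not the SUKI implementation (the libraries are C# in Unity). The config keys (`abstract_inputs`, `source`, `min`, `max`), the abstract input `MoveHorizontal`, and the joint names are hypothetical. The game only ever reads the abstract input, so swapping the elbow profile for a knee profile changes the controlling motion without touching game code.

```python
import json

# Hypothetical external configuration files: each abstract input the game
# reads is bound to one concrete body measurement plus the extents
# (min-max range) that should map onto 0..1.
ELBOW_PROFILE = json.dumps({
    "abstract_inputs": {
        "MoveHorizontal": {"source": "LeftElbowFlexion", "min": 30.0, "max": 120.0}
    }
})
KNEE_PROFILE = json.dumps({
    "abstract_inputs": {
        "MoveHorizontal": {"source": "RightKneeFlexion", "min": 10.0, "max": 60.0}
    }
})


class AbstractInputs:
    """Resolves abstract game inputs to concrete measurements at runtime."""

    def __init__(self, config_text: str):
        self.bindings = json.loads(config_text)["abstract_inputs"]

    def get_float(self, name: str, measurements: dict) -> float:
        """Return the bound measurement normalized to 0..1 (clamped)."""
        binding = self.bindings[name]
        raw = measurements[binding["source"]]
        span = binding["max"] - binding["min"]
        value = (raw - binding["min"]) / span
        return max(0.0, min(1.0, value))


# Game code: identical regardless of which profile is loaded.
def player_x(inputs: AbstractInputs, measurements: dict) -> float:
    return inputs.get_float("MoveHorizontal", measurements)


frame = {"LeftElbowFlexion": 75.0, "RightKneeFlexion": 60.0}
print(player_x(AbstractInputs(ELBOW_PROFILE), frame))  # 0.5
print(player_x(AbstractInputs(KNEE_PROFILE), frame))   # 1.0
```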

While enAbleGames' initial release of games is on Windows PCs and uses the Kinect, our architecture supports future porting to other platforms, including mobile-based VR and AR, and to newer tracking technologies. enAbleGames supports a variety of player tracking devices beyond the Microsoft Kinect, which had an installed base of nearly 40 million devices already in customers' homes. Several companies make Kinect-like 3D cameras that follow a common standard; enAbleGames supports the Orbbec Astra line of 3D sensors (under $150), supports body tracking with an iPhone or iPad, and will soon support a simple webcam.

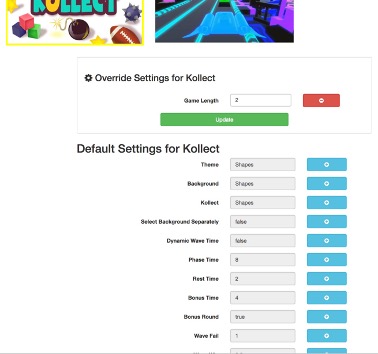

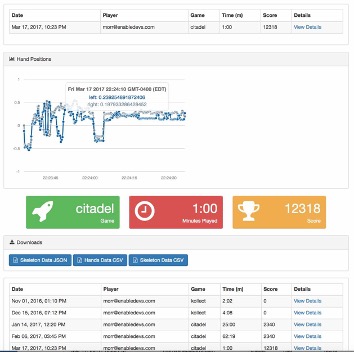

enAbleGames is not simply a platform for building games; it is a full end-to-end solution for making games that integrate with our cloud-based web portals for remote monitoring, control, and customization based on individual game and player profiles. The game development team has created front-end remote features so that the web portal and game dashboard are functional for both the therapist and the patient. Currently, players can access their specific, customized games in their home exercise programs, and therapists can configure game parameters and monitor player data in real time (clinic) and after use (home). Adjustments that therapists make to game parameters via the web portal are applied to the game through the same web API, so patient profiles are created and changes are saved for future game sessions. The back-end server stores this information in a database, and a front-end web application lets therapists remotely and securely access this information, along with visualizations of the data, to inform decisions about the plan of care and the dosing and progression of AVG intervention sessions.

Figure: Remote Game Parameter Adjustment (left) and Patient Session Visualization (right).

For our architecture, we use a standard web MEAN stack (MongoDB, ExpressJS, AngularJS, NodeJS) deployed on Microsoft's HIPAA-compliant cloud platform, Azure. A NodeJS server application (the backend server) sits at the center; within it, we use the popular ExpressJS library to handle routing for our REST API, and we aim to maintain 100% code coverage. The server also serves our frontend web application as an AngularJS SPA (single-page application). Data are stored in a NoSQL database: we use MongoDB instances for local development and testing, and Microsoft DocumentDB's MongoDB protocol support in our production cloud. We use token-based authentication with JWTs (JSON Web Tokens) to authenticate API calls and track users between calls.
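As a language-neutral illustration of what a JWT contains, here is a minimal Python sketch of creating and verifying an HS256-signed token using only the standard library. The HS256 algorithm, the `sub` claim, and the secret are assumptions: the text does not say which algorithm or claims the server uses, and a production NodeJS server would normally rely on a maintained JWT library and also check claims such as `exp`.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    # JWTs use unpadded base64url encoding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    padding = "=" * (-len(text) % 4)
    return base64.urlsafe_b64decode(text + padding)


def sign_hs256(payload: dict, secret: bytes) -> str:
    """Create a compact JWT (header.payload.signature) signed with HMAC-SHA256."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join([
        _b64url(json.dumps(header, separators=(",", ":")).encode()),
        _b64url(json.dumps(payload, separators=(",", ":")).encode()),
    ])
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(sig)


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the payload if the signature matches, else raise ValueError."""
    header_b64, payload_b64, sig_b64 = token.split(".")
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking timing information.
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("invalid signature")
    return json.loads(_b64url_decode(payload_b64))


token = sign_hs256({"sub": "player-123"}, b"server-secret")
print(verify_hs256(token, b"server-secret"))  # {'sub': 'player-123'}
```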

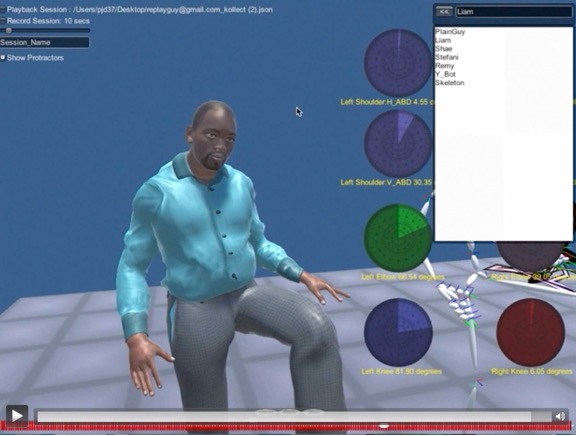

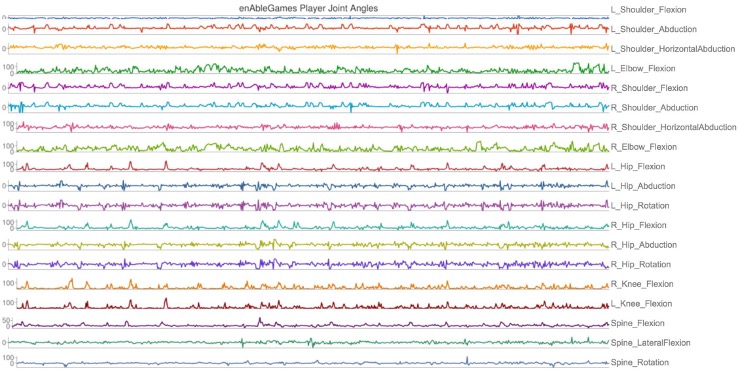

While graphs and summaries provide some needed evaluation metrics, they are often not intuitive and do not let the therapist see movement patterns that are not identifiable while watching a play session, yet these outcome data are important for insurance and for clinical documentation of progress. While video recording patients for later evaluation is technically feasible, its use in remote systems is limited by HIPAA concerns and by the limited spatial information it conveys. Our cloud architecture lets the therapist download the kinematic session data as a de-identified file for use in our Digital Body Replayer. The DBR uses an avatar-based recreation of the patient's movements so the provider can assess general movement skills and patterns that occurred during game play. The provider currently has playback controls, such as adjusting playback speed or jumping in time, and full virtual camera control to observe the motion from any angle, zoom in and out, and see selected joint-angle information. Joint-angle (ROM) data can also be exported to a spreadsheet for analysis and graphing.
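The DBR's internal computation is not described here. As an illustration of how a joint angle and a session ROM could be derived from de-identified kinematic data, the Python sketch below computes the elbow angle at each frame from three tracked 3D joint positions (the standard dot-product formula) and exports it as CSV, with the ROM (maximum minus minimum angle) appended. The frame layout and column names are hypothetical.

```python
import csv
import io
import math


def joint_angle(a, b, c) -> float:
    """Angle at joint b (degrees) between segments b->a and b->c in 3D."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.degrees(math.acos(cos_theta))


def export_rom_csv(frames) -> str:
    """frames: list of (time_s, shoulder, elbow, wrist) with 3D points."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["time_s", "elbow_angle_deg"])
    angles = []
    for t, shoulder, elbow, wrist in frames:
        angle = joint_angle(shoulder, elbow, wrist)
        angles.append(angle)
        writer.writerow([t, round(angle, 1)])
    # Range of motion over the session: max minus min observed angle.
    writer.writerow(["ROM", round(max(angles) - min(angles), 1)])
    return out.getvalue()


frames = [
    (0.0, (0, 1, 0), (0, 0, 0), (0, -1, 0)),  # arm straight: 180 degrees
    (0.5, (0, 1, 0), (0, 0, 0), (1, 0, 0)),   # elbow bent: 90 degrees
]
print(export_rom_csv(frames))
```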

Below is current development work that is being added as part of integration with Recupero Robotics’s therapy robots.

1 Robotic and Biometric Input Data Extensions

The patent-pending System for Unified Kinematic Input (SUKI), which permits a game to be controlled by a variety of input devices, was integrated with the haptic robot as well as with biometric sensors (accelerometers, galvanic skin response (GSR) sensors, and heart rate monitors).

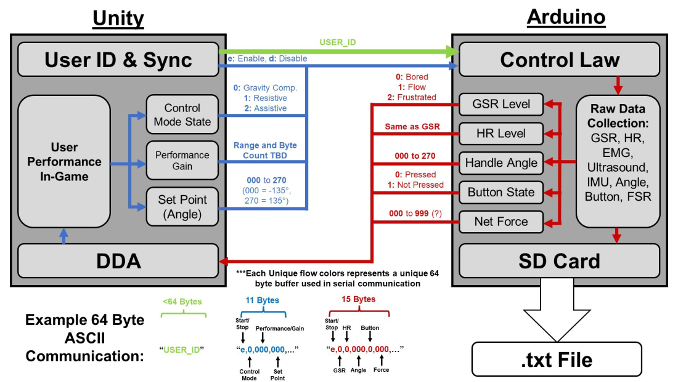

1.1 Serial Communication Protocol

The Unity-based games are currently deployed on a PC and launched through a central game Launcher (Fig 5). The launcher and game libraries were modified specifically for this project to support deployment on a custom portal. Because players log in centrally through the enAblegames Launcher app to the enAblegames portal, the login ID is encoded in a JSON Web Token that is passed to the game. This player ID is then passed through the serial protocol to the Recupero robot's Arduino microcontroller (Arduino). Similarly, a timestamp is recorded during the initial data transfer to synchronize the session data.

We implemented a two-way serial communication protocol between the haptic robot and the Unity-based game application (Fig. 6). The Arduino reads values from all sensors monitoring the player/patient and their interaction with the robot and feeds them to the Unity application as raw or modified data. The Arduino also controls the force feedback to the patient.
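The wire format of the serial protocol is not specified. The Python sketch below shows one plausible shape under stated assumptions: ASCII lines framed as `$fields*CS` with an NMEA-style XOR checksum, a `HELLO` message carrying the player ID and session-start timestamp, sensor sample lines (`S`), and force-feedback commands (`F`). All message names and field orders are hypothetical, and the transport (opening and reading the serial port) is omitted.

```python
def _checksum(body: str) -> str:
    # NMEA-style checksum: XOR of all bytes, as two hex digits.
    value = 0
    for byte in body.encode("ascii"):
        value ^= byte
    return f"{value:02X}"


def encode(fields) -> bytes:
    """Frame fields as one ASCII line. Fields must not contain ',' or '*'."""
    body = ",".join(str(f) for f in fields)
    return f"${body}*{_checksum(body)}\n".encode("ascii")


def decode(line: bytes) -> list:
    """Parse one framed line, raising ValueError on corruption."""
    text = line.decode("ascii").strip()
    if not text.startswith("$") or "*" not in text:
        raise ValueError("malformed frame")
    body, received = text[1:].rsplit("*", 1)
    if _checksum(body) != received.upper():
        raise ValueError("checksum mismatch")
    return body.split(",")


# Game -> robot: session start with the player ID (from the login token)
# and a timestamp used later to align game and robot data.
hello = encode(["HELLO", "player-123", 1700000000000])
# Robot -> game: one sensor sample (hypothetical field layout).
sample = encode(["S", 1700000000050, "pos=0.42", "pinch=1", "gsr=3.1"])
# Game -> robot: force-feedback command.
force = encode(["F", "assist", 0.25])

print(decode(hello))  # ['HELLO', 'player-123', '1700000000000']
```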

1.2 SUKI Biometric and Robotic Extensions

Because SUKI was designed around purely kinematic input data, the architecture was expanded to support biometric and robotic input (see Fig 7). This expansion included extensions to the SUKI Schema files, which are external to the game build and control how external data is mapped into game inputs. Through SUKI, input signals ranging from joint angles to relationships between body parts to biometric signals are mapped to standard input measurements such as Booleans, normalized vectors, or floats in the 0-1 range, so that the game can handle all inputs uniformly. The extensions add the SingleBiometricValue and SingleRoboticValue schema metrics, which take “BiometricValues” and “RoboticValues”, respectively. Whereas prior Schema metrics corresponded to a predefined set of named anthropometric values, such as joint angles and locations (e.g., “LeftElbow”), the availability of biometric and robotic data can vary, which required adding dynamic names to the SUKI architecture. These modifications can now support extensions to process arbitrary future inputs from any connected device.
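The dynamic-name lookup can be sketched in Python as below. The class name `SingleValueMetric` only mirrors the SingleBiometricValue and SingleRoboticValue schema metrics; it is hypothetical, as are the value names (`HeartRate`, `Pinch`, `GSR`) and extents. Each metric looks up its value by name at runtime, normalizes it to 0-1 (or thresholds it to a Boolean), and returns `None` when no connected device provides the named value.

```python
from typing import Dict, Optional


class SingleValueMetric:
    """Maps one dynamically named biometric/robotic value to a 0..1 float."""

    def __init__(self, source_group: str, value_name: str,
                 minimum: float, maximum: float):
        self.source_group = source_group  # e.g. "BiometricValues"
        self.value_name = value_name      # device-defined name, e.g. "HeartRate"
        self.minimum = minimum
        self.maximum = maximum

    def as_float(self, frame: Dict[str, Dict[str, float]]) -> Optional[float]:
        # Names are resolved at runtime because the set of connected
        # devices (and therefore of available values) can change.
        value = frame.get(self.source_group, {}).get(self.value_name)
        if value is None:
            return None
        t = (value - self.minimum) / (self.maximum - self.minimum)
        return max(0.0, min(1.0, t))

    def as_bool(self, frame, threshold: float = 0.5) -> bool:
        normalized = self.as_float(frame)
        return normalized is not None and normalized >= threshold


frame = {
    "BiometricValues": {"HeartRate": 100.0},
    "RoboticValues": {"HandlePosition": 0.2, "Pinch": 1.0},
}
hr = SingleValueMetric("BiometricValues", "HeartRate", 60.0, 140.0)
pinch = SingleValueMetric("RoboticValues", "Pinch", 0.0, 1.0)
gsr = SingleValueMetric("BiometricValues", "GSR", 1.0, 10.0)  # not connected
print(hr.as_float(frame), pinch.as_bool(frame), gsr.as_float(frame))
# 0.5 True None
```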

2 Automated Adjustment to Player Capabilities

We implemented automated parameter adjustment based on game performance, motor and cognitive impairment, and biometric sensors.

2.1 Dynamic Extent Adjustment Extensions

SUKI uses extents (min-max ranges of inputs, such as joint-angle Range Of Motion (ROM), heart rate, etc.) to adapt to individual patient capabilities. A prior limitation of SUKI was that its dynamic extent adjustment mode (which adjusts the min-max values dynamically) primarily supported improvement of motion (expanding ROMs outward) as a player reaches new physical milestones, using a Weighted Average approach (SukiExtentsWA). It is much harder to know when to adjust ROMs inward dynamically, because a player's movement might be limited by the gameplay itself rather than by their physical ability. To solve this, we introduced the notion of Extent Pressure, which is configurable within each game through Partially Ordered Set Masters (SukiExtentsPOSM). Certain games or activities may have a high outward pressure but a small inward pressure, meaning that ROMs can expand faster than they shrink. Switching between Extent modes, or adjusting their tolerances and pressures, is now as simple as toggling a checkbox or adjusting slider values in the game inspector.
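The internals of SukiExtentsWA and SukiExtentsPOSM are not given here, so the following Python sketch illustrates only the idea of asymmetric Extent Pressure: after each window of play, each extent moves a fraction of the way toward the observed min or max, using a larger fraction when expanding (outward pressure) than when shrinking (inward pressure). The update rule, the `Extents` class, and the default pressures are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Extents:
    low: float
    high: float
    outward_pressure: float = 0.5   # fraction of the gap closed when expanding
    inward_pressure: float = 0.05   # fraction of the gap closed when shrinking

    def update(self, window):
        """Move each extent toward the min/max seen in the latest window."""
        seen_low, seen_high = min(window), max(window)
        self.high = self._step(self.high, seen_high, expanding=seen_high > self.high)
        self.low = self._step(self.low, seen_low, expanding=seen_low < self.low)

    def _step(self, current, target, expanding):
        rate = self.outward_pressure if expanding else self.inward_pressure
        return current + rate * (target - current)

    def normalize(self, x):
        """Map a raw value into 0..1 using the current extents."""
        return max(0.0, min(1.0, (x - self.low) / (self.high - self.low)))


rom = Extents(low=40.0, high=100.0)
rom.update([30.0, 120.0])  # new extremes: expands quickly to about 35..110
rom.update([50.0, 90.0])   # smaller motions: shrinks slowly to about 35.75..109
```

With these pressures, a single round of larger motion widens the ROM immediately, while a round limited by gameplay narrows it only slightly.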

2.2 Haptic Biometric Based Dynamic Difficulty Adjustment (HBB-DDA)

In addition to the dynamic range adaptation that the SUKI extensions provide, a novel Haptic Biometric Based Dynamic Difficulty Adjustment (HBB-DDA) system was added. It serves several functions on both the game side (difficulty and feedback) and the robotics side (adapting the assistive/resistive forces experienced by patients). This requires the flexibility to adjust game difficulty, robotic assistive/resistive difficulty, or both, based on user capabilities (motor and cognitive), performance (e.g., game score), and therapist settings (parameters).

System Design: The HBB-DDA system in the games includes two subsystems. The Dynamic Difficulty Adjustment System adjusts the difficulty of the therapy game on the fly, based on the patient's performance in each game and with the help of physiological data. The Dynamic Feedback System uses the patient's profile to change the feedback elements in the game, including text feedback, UI, visual effects, and sound effects. The two subsystems work together to fit the game to each patient and provide the right amount of challenge without making the tasks hard to understand.

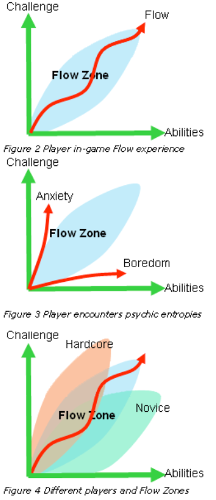

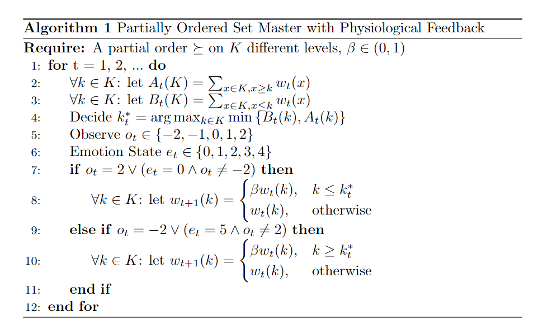

Dynamic Difficulty Adjustment (DDA): Previous research has shown that patient engagement during a rehabilitation session positively influences the session's outcome. According to Csikszentmihalyi's flow model, engagement is maximized when the difficulty matches the user's ability: if the task is too hard, the user may experience anxiety, and if it is too easy, the user may feel bored. Different people have different preferences for challenge, which means they also have different flow zones, and a flow model can be built to represent the flow zones inside a game (Chen, 2007). We use physiological data to estimate where the patient's flow zone is and then keep the game's difficulty within it. The system uses an algorithm called Partially Ordered Set Master (POSM) to adjust the difficulty on the fly; it relies on a “harder than” relationship between difficulty levels (Missura, 2011). We first assign a belief value to each difficulty level and make a prediction based on these beliefs. We then observe the patient's performance during one game segment (a level or a period of play) and update the beliefs based on that observation and the patient's emotional state (derived from physiological data). Compared to regular DDA algorithms that simply increment or decrement the level after each observation, POSM usually suggests a wider range of levels, tending toward easier ones, which leads to higher patient engagement (Okzul, 2019).
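The POSM update can be sketched in Python as follows, simplified to a chain of levels (level i is harder than level j when i > j) rather than a general partial order. Each level keeps a belief weight; the predicted level maximizes the smaller of the total weight at or above it and the total weight at or below it, and an observation of "too easy" or "too hard" multiplies the weights of the levels it rules out by `beta`. The value `beta = 0.5` and how performance and emotional state are turned into the observation are assumptions.

```python
class POSM:
    """Partially Ordered Set Master, specialized to a chain of levels."""

    def __init__(self, num_levels: int, beta: float = 0.5):
        self.weights = [1.0] * num_levels
        self.beta = beta

    def predict(self) -> int:
        """Level maximizing min(weight at-or-harder, weight at-or-easier)."""
        best, best_score = 0, float("-inf")
        for k in range(len(self.weights)):
            harder_or_equal = sum(self.weights[k:])
            easier_or_equal = sum(self.weights[:k + 1])
            score = min(harder_or_equal, easier_or_equal)
            if score > best_score:
                best, best_score = k, score
        return best

    def update(self, level: int, observation: int) -> None:
        """observation: +1 too easy, -1 too hard, 0 about right."""
        if observation > 0:
            # Too easy: this level and every easier one are too easy as well.
            for k in range(level + 1):
                self.weights[k] *= self.beta
        elif observation < 0:
            # Too hard: this level and every harder one are too hard as well.
            for k in range(level, len(self.weights)):
                self.weights[k] *= self.beta


dda = POSM(num_levels=5)
level = dda.predict()   # 2, the middle level, before any evidence
dda.update(level, +1)   # the segment was too easy
print(dda.predict())    # 3
```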

The emotional state is calculated mainly from skin conductance (GSR) and corrected using heart rate (HR) data. Baseline GSR and HR data are recorded before the game session; during the game, we look at the change in the patient's biometric data relative to this baseline. Values below or near the baseline indicate that the patient is bored, and values above it indicate that the patient is stressed. The HR data is used to correct the GSR data: if the two disagree (one goes above the baseline and the other goes below), the DDA uses only the game performance data; if they agree (e.g., both go above the baseline), the DDA uses both.
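The baseline comparison and HR correction can be sketched in Python. The 5% "near baseline" margin and the rule for combining the emotional signal with game performance are assumptions; the text specifies only the agree/disagree logic.

```python
from enum import Enum


class Emotion(Enum):
    BORED = "bored"
    STRESSED = "stressed"
    UNKNOWN = "unknown"  # GSR and HR disagree


def emotional_state(gsr, hr, gsr_baseline, hr_baseline, margin=0.05):
    """Classify arousal relative to pre-session baselines.

    `margin` is a hypothetical tolerance: readings up to 5% above baseline
    still count as "near the baseline".
    """
    gsr_above = gsr > gsr_baseline * (1 + margin)
    hr_above = hr > hr_baseline * (1 + margin)
    if gsr_above and hr_above:
        return Emotion.STRESSED
    if not gsr_above and not hr_above:
        return Emotion.BORED
    return Emotion.UNKNOWN


def dda_observation(performance: int, emotion: Emotion) -> int:
    """Combine performance (+1 too easy, -1 too hard, 0 ok) with emotion.

    If GSR and HR disagree, only performance is used. Otherwise the
    emotional signal (+1 bored, -1 stressed) is added and the sign of the
    sum is returned (a hypothetical combination rule).
    """
    if emotion is Emotion.UNKNOWN:
        return performance
    emotional = 1 if emotion is Emotion.BORED else -1
    total = performance + emotional
    return (total > 0) - (total < 0)


state = emotional_state(gsr=5.0, hr=90, gsr_baseline=4.0, hr_baseline=70)
print(state, dda_observation(+1, state))  # Emotion.STRESSED 0
```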

Dynamic Feedback: This system changes the complexity of the feedback the patient receives during the game session. Its goal is to notify patients of changes in difficulty or game settings without making them aware of the HBB-DDA system itself. It takes input from the HBB-DDA system, including the current suggested difficulty level and the patient's profile. Currently, the patient profile records the patient's tolerance for text feedback and sound feedback, whether the patient is colorblind, and their initial cognition and motor capability scores. The initial difficulty set by the HBB-DDA system is determined by the motor capability score from the patient profile and by certain control features of the game, for example how much pinch the patient must perform for it to register as a pinch. Then, during the game, based on changes in the difficulty suggested by the DDA, the system triggers different types of feedback, including text feedback, visual effects, sound effects, and changes in music. Section 5.0 provides game-specific details of the DDA implementation during the experiment.
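A Python sketch of the profile-driven feedback selection follows. The profile fields mirror those listed above, but the 0-1 score scale, the linear mapping from motor score to starting level, and the event names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PatientProfile:
    text_tolerance: bool     # can the patient handle on-screen text?
    sound_tolerance: bool    # are sound effects and music changes OK?
    colorblind: bool
    motor_score: float       # hypothetical 0..1 scale
    cognition_score: float   # hypothetical 0..1 scale (unused in this sketch)


def initial_level(profile: PatientProfile, num_levels: int) -> int:
    """Map the motor capability score linearly to a starting level."""
    return min(num_levels - 1, int(profile.motor_score * num_levels))


def feedback_for_change(profile: PatientProfile, old_level: int, new_level: int):
    """List feedback events for a DDA difficulty change, filtered by profile."""
    if new_level == old_level:
        return []
    highlight = "yellow" if profile.colorblind else "green"
    events = [f"visual_effect:{highlight}"]
    if profile.text_tolerance:
        # Text that reflects the change without mentioning the DDA itself.
        events.append("text:level_up" if new_level > old_level else "text:encouragement")
    if profile.sound_tolerance:
        events += ["sound_effect", "music_change"]
    return events


p = PatientProfile(text_tolerance=False, sound_tolerance=True,
                   colorblind=True, motor_score=0.5, cognition_score=0.3)
start = initial_level(p, num_levels=5)          # 2
print(feedback_for_change(p, start, start + 1))
# ['visual_effect:yellow', 'sound_effect', 'music_change']
```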

3 New Games and Assessments

Along with assessment tasks, a variety of therapy games were created and adapted to be played with the haptic robot. These games include assessment-based games, a match-3 game, 2 others brought over from the enAblegames suite, and 6 new games.

3.1 Assessment Tasks and Games

Three assessment tasks (Fig 3.4: Top row) were created to test the patient's motor and cognitive abilities: Trajectory Tracking, Spatial Span, and N-Back. Trajectory Tracking primarily tests motor ability, N-Back tests cognitive ability, and Spatial Span tests a mix of both. The original set of tasks was revamped to integrate the enAblegames software and now has many adjustable parameters, allowing use across a range of therapeutic needs. In the Trajectory Tracking task, the player follows a sinusoidal wave to exhibit their range of motion; parameters such as trial length, sine wavelength, and sine frequency can be adjusted. In the Spatial Span task, the player memorizes the positions of flashing squares and repeats the sequence by moving the arm to the correct position and then either pressing a button or holding still to select. Parameters include the starting sequence length and the hold time required to select a square when not using a button. The final task is the N-Back task, in which the player must remember numbers that have appeared on the screen and press the button when a certain condition is met; it includes 0-Back, 1-Back, and 2-Back variants. When a number is selected, it flashes green if it meets the predetermined criteria (correct) or red if it does not (incorrect); for patients with colorblindness, yellow (correct) and magenta (incorrect) are used instead.
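The N-Back target rule and the two color palettes can be sketched in Python as follows. Treating 0-Back as matching a fixed target chosen before the trial is the usual convention and an assumption here; the function names are hypothetical.

```python
def is_target(sequence, index, n, zero_back_target=None):
    """True if sequence[index] is a target in an n-back task.

    0-back: the item matches a fixed target chosen before the trial.
    n >= 1: the item matches the item shown n positions earlier.
    """
    if n == 0:
        return sequence[index] == zero_back_target
    return index >= n and sequence[index] == sequence[index - n]


def response_color(correct: bool, colorblind: bool) -> str:
    # Standard palette is green/red; the colorblind palette is yellow/magenta.
    if colorblind:
        return "yellow" if correct else "magenta"
    return "green" if correct else "red"


def score_press(sequence, index, n, colorblind=False, zero_back_target=None):
    """Feedback color when the player presses the button on item `index`."""
    correct = is_target(sequence, index, n, zero_back_target)
    return response_color(correct, colorblind)


digits = [3, 7, 3, 3, 5]
print([i for i in range(len(digits)) if is_target(digits, i, 2)])  # [2]
print(score_press(digits, 3, 2, colorblind=True))                  # magenta
```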


For each assessment task, a similar game was created or adapted so that players can continue to strengthen their motor and cognitive abilities without practicing directly on the assessments themselves. The assessment games are Coin Runner (Trajectory Tracking), Follow the Sequence (Spatial Span), and What’s in the Box? (N-Back) (Fig. 8 Bottom Row). Each game shares many of the adjustable parameters of its assessment task and adds some game-specific parameters. Coin Runner is a Trajectory Tracking game in which the player collects coins generated on the path ahead by moving their character left and right with the Recupero robot handle. Follow the Sequence looks different from the assessment task and can vary the number of flashing buttons and the sequence length to further challenge or simplify the task. “What’s in the Box?” demands more motor ability than the original N-Back task and includes a difficulty ramp with varying types of items to remember; options such as animals, shapes, and numbers are available to the player.

3.2 Therapy Games

Along with the assessment tasks, a variety of therapy games were created and adapted to be played with the Recupero robot using the extensions to the enAblegames libraries (Fig. 3.5). Two games from the enAblegames suite, Citadel and SkyBurger, were selected because they share similar linear movement but differ in structure (endless vs. level-based, respectively) and cognitive load (minimal vs. pattern recognition, respectively). These games were extended with the new HBB-DDA system. Additionally, a new game, Space Ball, was created both to support the additional inputs and to test the new HBB-DDA system.

Two other games were developed for the suite of games for Recupero's haptic robot. The original testbed game TheraPong, used with the Theradrive robot, was modified so that it is no longer hardcoded to a single robotic input and instead uses the enAblegames libraries for SUKI, body tracking, remote parameters, and logging. Donut-Match-3, a Candy Crush-style match-3 game chosen because that game style appeals to a mostly female audience, was added and leverages the additional inputs (pinch and button) that were added to the haptic robot.

Although this was not a formally declared goal of Phase I, the enAblegames libraries were also added to six (6) additional new games in less than two weeks for future patient testing on the Recupero haptic robot, demonstrating the flexibility of our approach. While these games are not yet used in patient testing, they have been tested for compatibility on our Arduino test system.

4 Cloud-Based Support for Remote Parameter Adjustment and Therapy Support

The enAblegames portal runs on an Azure server. Because of the long-term goals of this project, a second, Linux-based server was created to host the robotic-based game and player data, and the libraries were extended to make switching servers easy. All dynamic difficulty parameters defined for the games and tasks are declared as enAblegames Parameters in external JSON files. These declarations allow the parameters to be set remotely via the web portal, to dynamically generate an in-game menu, and to be modified dynamically by the HBB-DDA. The default parameter file was replaced with a configurable default that can be customized to generate multiple games per project, simplifying maintenance of game generation. This file is also used to automatically configure the portal for remote parameter setting when new games are added.
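The parameter declaration format is not shown here. The Python sketch below assumes a hypothetical JSON schema (`name`, `type`, `default`, `min`, `max`) and shows how one declaration file could both validate remotely set values (clamping numbers to their declared range) and generate a simple in-game menu. The parameter names are illustrative, loosely borrowed from the assessment tasks.

```python
import json

# Hypothetical parameter declarations for one game.
PARAMS_JSON = """
[
  {"name": "TrialLength", "type": "float", "default": 30.0, "min": 10.0, "max": 120.0},
  {"name": "SequenceLength", "type": "int", "default": 3, "min": 2, "max": 9},
  {"name": "UseButtonSelect", "type": "bool", "default": true}
]
"""


def load_parameters(text, overrides=None):
    """Return {name: value}, applying remote overrides and clamping to range."""
    values = {}
    for spec in json.loads(text):
        value = (overrides or {}).get(spec["name"], spec["default"])
        if spec["type"] in ("int", "float"):
            value = max(spec["min"], min(spec["max"], value))
            value = int(value) if spec["type"] == "int" else float(value)
        values[spec["name"]] = value
    return values


def menu_lines(text):
    """Generate a plain-text in-game menu from the same declarations."""
    lines = []
    for spec in json.loads(text):
        if spec["type"] == "bool":
            lines.append(f"[ ] {spec['name']}")
        else:
            lines.append(f"{spec['name']}: {spec['min']}..{spec['max']}")
    return lines


# A remote value outside the declared range is clamped to the maximum.
print(load_parameters(PARAMS_JSON, {"SequenceLength": 12}))
print(menu_lines(PARAMS_JSON))
```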

Play Session Data Recording: enAblegames records player-generated data to the HIPAA-compliant cloud for performance monitoring, historical correlations, and data mining. The backend api/session/:id endpoint, which external clients use to record new metric data (such as robotics metrics) to the database under a given session, was modified to support the new robotic and biometric data.
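As an illustration of how an external client might call the api/session/:id endpoint, the Python sketch below builds, but does not send, a request carrying robotic and biometric metrics. The host name, the POST method, the Bearer authorization scheme, and the payload layout are all assumptions; only the endpoint path comes from the text above.

```python
import json
import urllib.request

BASE_URL = "https://portal.example.com"  # hypothetical host


def build_metric_upload(session_id, token, robotic, biometric):
    """Build (but do not send) a request recording metrics for a session."""
    body = json.dumps({
        "robotic": robotic,      # hypothetical layout, e.g. {"HandlePosition": [...]}
        "biometric": biometric,  # hypothetical layout, e.g. {"HeartRate": [...]}
    }).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/api/session/{session_id}",
        data=body,
        method="POST",  # assumed HTTP method
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # assumed JWT bearer scheme
        },
    )


req = build_metric_upload("abc123", "<jwt>", {"HandlePosition": [0.1, 0.2]},
                          {"HeartRate": [72, 75]})
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would actually send it.
```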

Log Tracker Extensions: enAblegames uses JSON-encoded logs for both local and server recording of input and game events. Entries can be event-based logs of specific game data (e.g., a player achievement or a difficulty level adjustment) or tick-based timed tracking, such as player movement. All games were created with extended logs that record relevant game data so that player movement, biometrics, and actions can be correlated with game performance. Extensions were added to support recording robotic and biometric data, and the format permits future extensions for multi-degree-of-freedom (DOF) robots or multi-dimensional biometric data.
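A minimal Python sketch of the two log entry kinds, written as one JSON object per line, follows. The field names (`kind`, `t`, `robot`, `bio`, and so on) are hypothetical. Storing the robot position as a list is one way to leave room for multi-DOF robots without changing the format.

```python
import json


def event_record(t, event, **data):
    """Event-based entry, e.g. an achievement or a difficulty change."""
    return json.dumps({"kind": "event", "t": t, "event": event, "data": data})


def tick_record(t, joints=None, robot_dof=None, biometrics=None):
    """Tick-based entry sampled at a fixed rate.

    robot_dof is a list, so a 1-DOF robot logs [x] and a future 3-DOF robot
    could log [x, y, z] with the same format.
    """
    entry = {"kind": "tick", "t": t}
    if joints is not None:
        entry["joints"] = joints
    if robot_dof is not None:
        entry["robot"] = robot_dof
    if biometrics is not None:
        entry["bio"] = biometrics
    return json.dumps(entry)


log_lines = [
    tick_record(0.00, robot_dof=[0.42], biometrics={"GSR": 3.1, "HR": 74}),
    event_record(0.02, "DifficultyChanged", old=2, new=3),
    tick_record(0.05, robot_dof=[0.45], biometrics={"GSR": 3.2, "HR": 75}),
]
print("\n".join(log_lines))
```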

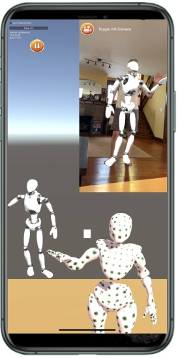

5 Body Tracking Extensions

5.1 Making Body Tracking Optional

Because player kinematic data is now optional, a connection to the body tracking server is no longer required to use the SUKI input system. When only robotic or biometric data is used, a timeout function permits SUKI inputs even when no network connection is detected. In addition, the data-setting function was extended to accept the time of each sample so that SUKI knows when the data is “moving”, instead of relying, as before, on the body tracker to indicate that the player had moved.

5.2 Mobile Device Body Tracking Support

While enAblegames supports body tracking through various external 3D cameras such as the Kinect and Orbbec Astra, support for iPhone/iPad-based tracking was added by creating an iOS app, enableserver.
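One way to read the timestamp change is that an input stream counts as live ("moving") only while timestamped samples keep arriving. The Python sketch below tracks each source's last sample time and skips sources that have been silent longer than a timeout, so robotic or biometric input keeps working when no body-tracking data arrives. The class, the 2-second timeout, and the injectable clock are assumptions for illustration.

```python
import time


class InputSource:
    """Tracks freshness of one input stream using sample timestamps."""

    def __init__(self, timeout_s=2.0, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_sample_time = None
        self.value = None

    def set_data(self, value, sample_time):
        # The caller supplies each sample's timestamp, so freshness no
        # longer depends on the body tracker reporting that the player moved.
        self.value = value
        self.last_sample_time = sample_time

    def is_live(self):
        """True while samples keep arriving within the timeout window."""
        if self.last_sample_time is None:
            return False
        return self.clock() - self.last_sample_time <= self.timeout_s


def active_sources(sources):
    """Names of sources usable right now; stale or silent ones are skipped."""
    return [name for name, src in sources.items() if src.is_live()]


now = [100.0]  # fake clock value so the example is deterministic


def fake_clock():
    return now[0]


sources = {"body": InputSource(clock=fake_clock), "robot": InputSource(clock=fake_clock)}
sources["robot"].set_data(0.4, sample_time=99.5)
print(active_sources(sources))  # ['robot']: no body-tracking data has arrived
```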